Difference between revisions of "AJAX WebMapping Vector Rendering Design"

| (37 intermediate revisions by 6 users not shown) | |||

| Line 2: | Line 2: | ||

This is not supposed to be the final design, but rather draft to be used to facilitate further discussions. | This is not supposed to be the final design, but rather draft to be used to facilitate further discussions. | ||

My hope is that the final version of this design will be used by all the AJAX webmapping clients to make sharing the same code base easier. | My hope is that the final version of this design will be used by all the AJAX webmapping clients to make sharing the same code base easier. | ||

| − | I'd like to credit Patrice Cappelaere who has SVG/VML rendering working in Mapbuilder and deployed at GeoBliki. This design aims to extend Patrice's code to be more generic and extensible. | + | I'd like to credit Patrice Cappelaere who has SVG/VML rendering working in Mapbuilder and deployed at [http://eo1.geobliki.com/wfs/map GeoBliki]. This design aims to extend Patrice's code to be more generic and extensible. |

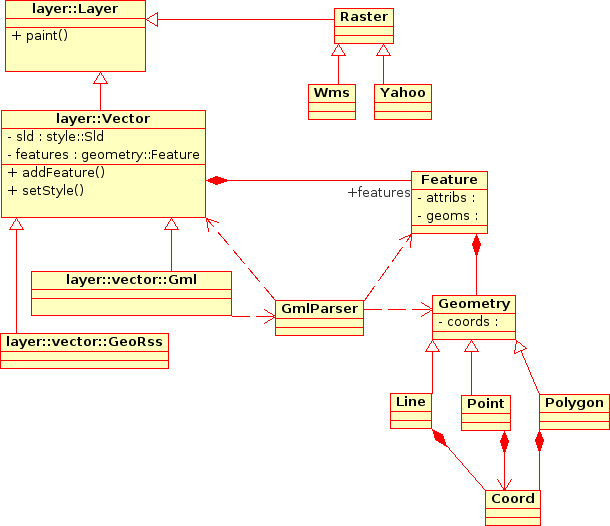

==Vector Layers Class Diagram== | ==Vector Layers Class Diagram== | ||

| + | [[Image:VectorLayers.png]] | ||

| + | |||

| + | The '''layer/Vector''' class is responsible for storing a list of Features and Styles. It calls a Parser to load Features as required (eg for a Web Feature Service), and initiates rendering by calling Renderer. | ||

| + | |||

| + | ===Simplification of features=== | ||

| + | As a map is zoomed out, the number of features that needs to be rendering increases significantly - to the point where a browser becomes too slow, or crashes. | ||

| + | |||

| + | A common solution is to use a different FeatureCollection for different zoomLevels. '''layer/Vector''' should store the source URL of different layers and the zoolLevels appropriate for each featureCollection. | ||

| + | ===Comments=== | ||

| + | # Cameron Shorter: It might be better to move the array of features out of layer/Vector and into a FeatureCollection. | ||

| + | |||

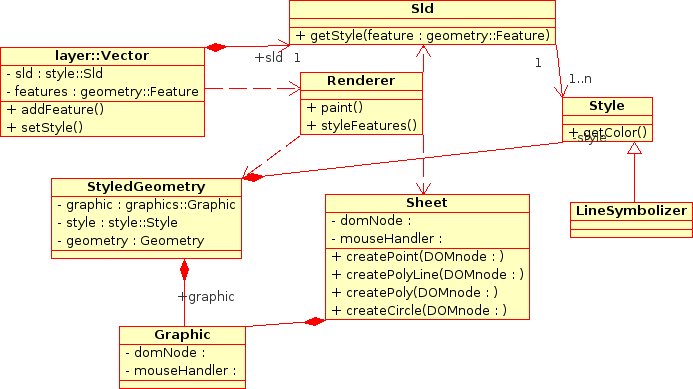

| + | ==Styling Class Diagram== | ||

| + | [[Image:Styling.png]] | ||

| + | |||

| + | '''Renderer''' is responsible for: | ||

| + | # Creating '''StyledGeometry''' for each feature. A StyledGeometry's is determined by parsing the SLD for each Feature. | ||

| + | # Rendering each feature by done by calling Sheet.createGraphic(). | ||

| + | |||

| + | There are plenty of opportunities for optimizing rendering, and this logic belongs in the Renderer. Eg: | ||

| + | * Only features being displayed should be renderered. (Filter by bounding box). | ||

| + | |||

| + | ===Comments=== | ||

| + | |||

| + | Cameron Shorter: '''StyledGeometry''' should probably be merged into '''Feature'''. There is a 1:1 relationship between the 2. | ||

| − | |||

| − | |||

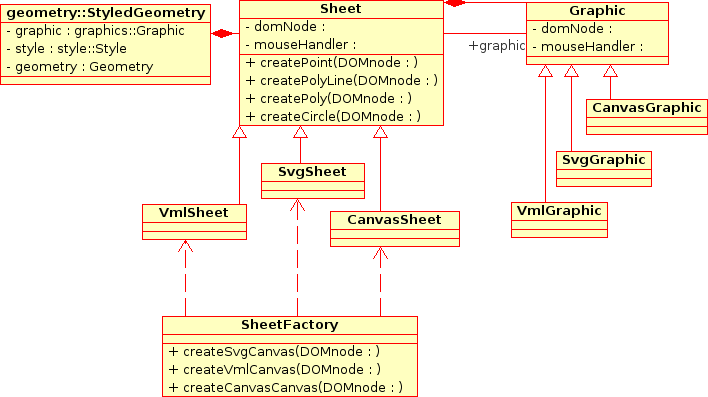

==Graphics Class Diagram== | ==Graphics Class Diagram== | ||

| + | [[Image:Graphics.png]] | ||

| + | |||

| + | Sheet and Graphic represents graphic primitives. They deal directly in pixel coordinates and know nothing about the spatial objects they represent. | ||

| + | |||

| + | '''Sheet''' represents a vector rendering engine and is responsible for creating shapes. | ||

| + | |||

| + | '''Graphic''' represents a graphic shape (line, polygon, circle, etc). It points to a physical shape in the HTML DOM. | ||

| + | |||

| + | ===Renderer Characteristics=== | ||

| + | '''SVG''' SVG is inbuilt into Firefox 1.5+, ie doesn't require a pluggin. Not provided in IE 6.0. SVG allows mouseEvents to be attached to shapes. 10,000 features takes ~ 40 secs to render. (MSD3 dataset) | ||

| + | |||

| + | '''VML''' VML is similar to SVG, provided with IE 6.0 (& earlier?). VML allows mouseEvents to be attached to shapes. 10,000 features takes ~ 8 mins to render. | ||

| + | |||

| + | '''Canvas''' Canvas is provided with Firefox 2.5+. Is reportedly faster than SVG. But you cannot attach mouseEvents to features. | ||

| + | |||

| + | '''WZ Graphics'''A library available for older browsers which draws a line by drawing hundreds of colored DIV tags. This is limited by the size of the data it can render. Suitable for ~ 50 lines, ~ 1000 points. | ||

| + | |||

| + | ===Attaching MouseEvents to Features=== | ||

| + | This functionality makes it easier to select a feature by clicking on it. The work around (for Canvas) will require a search algorithm to be written in JS to search through all the features. | ||

| + | ===Rendering Libraries=== | ||

There are opportunities for collaboration with other projects/libraries in this Graphics package. | There are opportunities for collaboration with other projects/libraries in this Graphics package. | ||

In particular: | In particular: | ||

* This Graphics library should be able to stand alone as a graphics rendering library, independant of the Geospatial side of the code. It can then be packaged up and used for other AJAX clients requiring Vector rendering graphics. | * This Graphics library should be able to stand alone as a graphics rendering library, independant of the Geospatial side of the code. It can then be packaged up and used for other AJAX clients requiring Vector rendering graphics. | ||

| − | * ExplorerCanvas is a cross browser Canvas library. | + | * ExplorerCanvas is a cross browser Canvas library. Supports Canvas for firefox and VML. I don't think it supports attaching mouse events to features. |

| − | * http://starkravingfinkle.org/blog/2006/03/svg-in-ie is worth watching too. | + | * Dojo has a cross VML/SVG library dojo.gfx . A demo at [http://archive.dojotoolkit.org/nightly/demos/gfx/circles.html]. They have talk of extending support to other renderers. The dojo project has a strong community behind it and is worth considering. |

| + | * [http://starkravingfinkle.org/blog/2006/03/svg-in-ie http://starkravingfinkle.org/blog/2006/03/svg-in-ie] is worth watching too. | ||

| + | |||

| + | ===Comments=== | ||

| + | # Jody Garnett: can look into the Go-1 specification for langauge to use. Ie you cannot handle a raw SVG element produced from a Geometry, you will need to decimate it based on the current display DPI. Go-1 provides the idea of a "Graphic" which is produced by processing the Feature+SLD into a Shape+Style. Using SVG (with the feature attirbutes boiled down to some style cues) and the feature id you could have both a nice display visually identical to looking at the complete feature, and enough information to request the feature attribtues when an SVG shape is clicked on. Note: you can build a lazy mapping from ID to a name attributes for tooltip purpose if you need. | ||

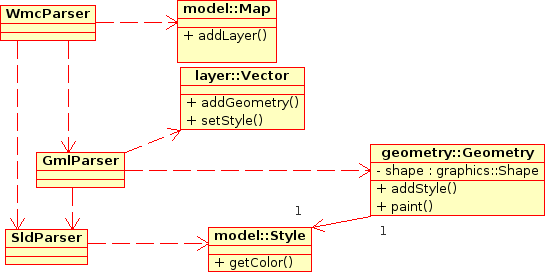

==Parsers Class Diagram== | ==Parsers Class Diagram== | ||

| + | [[Image:Parsers.png]] | ||

| + | |||

| + | The Parser objects will parse source documents (usually in XML format) and build Javascript objects. Documents to be parsed include: | ||

| + | * Geographic Markup Language (GML) | ||

| + | * Styled Layer Descriptors (SLD) Describes style for GML | ||

| + | * Geographic RSS (GeoRSS) RSS with geographic tags | ||

| + | * Context (The Context is an XML description of the layers on a map, the source of the data, and the style to apply to the layers). | ||

| + | ... | ||

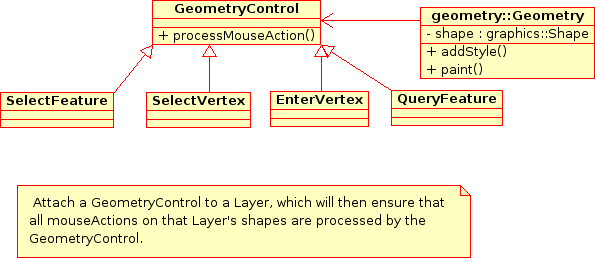

==MouseEvent Processing Class Diagram== | ==MouseEvent Processing Class Diagram== | ||

| − | MouseEvents can be attached to each | + | [[Image:Controls.png]] |

| + | |||

| + | MouseEvents can be attached to each Geometry. This means that we can add functions like: | ||

* Popups when a user hovers over a feature. | * Popups when a user hovers over a feature. | ||

* Select a feature when a user clicks on the feature. | * Select a feature when a user clicks on the feature. | ||

* Select a vertex when a user clicks on a vertex. (This would require a shape to be drawn on each vertex of a line being queried). | * Select a vertex when a user clicks on a vertex. (This would require a shape to be drawn on each vertex of a line being queried). | ||

| − | |||

| − | == | + | |

| + | ==Source UML== | ||

| + | The source UML diagram is stored in the Mapbuilder subversion repository at: | ||

| + | [https://svn.codehaus.org/mapbuilder/trunk/mapbuilder/mapbuilder/design/uml/graphics.xmi https://svn.codehaus.org/mapbuilder/trunk/mapbuilder/mapbuilder/design/uml/graphics.xmi] | ||

| + | It was created using Umbrello on Linux. | ||

==Use Cases== | ==Use Cases== | ||

| Line 39: | Line 98: | ||

# Continuing four: TC wants to change the *displayed position* of a point or a line without changing the underlying data. As cartography, each map's visual appearance communicates information that a particular scale might not permit the raw data to easily reveal. The canonical example was shown at the ESRI UC a few weeks ago: two road segments are distinct, but at a particular scale, the cartographic styling makes them appear so wide that they merge visually. The best known solution to this is to actually move one of the line's pixel position to visually indicate the merger. What is the sequence of steps and what datastructures change? How are the styling choices associated with the chosen scale? | # Continuing four: TC wants to change the *displayed position* of a point or a line without changing the underlying data. As cartography, each map's visual appearance communicates information that a particular scale might not permit the raw data to easily reveal. The canonical example was shown at the ESRI UC a few weeks ago: two road segments are distinct, but at a particular scale, the cartographic styling makes them appear so wide that they merge visually. The best known solution to this is to actually move one of the line's pixel position to visually indicate the merger. What is the sequence of steps and what datastructures change? How are the styling choices associated with the chosen scale? | ||

# After laying out a sequence of points that form a closed polygon, TC wants to know the area and perimeter of the polygon. How does this information get calculated and passed out? | # After laying out a sequence of points that form a closed polygon, TC wants to know the area and perimeter of the polygon. How does this information get calculated and passed out? | ||

| − | # A group of cyclists documents their summer trip in a data set with a long polyline covering four thousand miles miles and various point features with interesting styling and labels. TC wants to *play* the story in an OpenLayers map. By "play," I mean the video analogy of having a stop/play forward/play backward button, fast forward and fast backward buttons, and when the system is in action, the map automatically moves and the popups automatically appear as the map moves along the route at some scale specified in the styling made by the cyclists. What data structures represent the cyclists' details? What sequence of methods cause the movie to play? | + | # A group of cyclists documents their summer trip in a data set with a long polyline covering four thousand miles miles and various point features with interesting styling and labels. TC wants to *play* the story in an OpenLayers map. By "play," I mean the video analogy of having a stop/play forward/play backward button, fast forward and fast backward buttons, and when the system is in action, the map automatically moves and the popups automatically appear as the map moves along the route at some scale specified in the styling made by the cyclists. What data structures represent the cyclists' details? What sequence of methods cause the movie to play? |

| + | # TC gets assigned a task: digitize all the roads in Madagascar using this 60cm satellite imagery. How does this design make that easy? I realize that some of these may get cast out of initial designs as being too advanced for our first pass. However, I think such a choice should be made consciously. | ||

| + | |||

| + | Some additional use cases added by Stephen Woodbridge: | ||

| + | |||

| + | #TC has loaded some polygon data from different sources. He has some some adjacent polygons that he want to share a common edge. He needs to select each polygon and the start and end of the shared edge on each that needs to be snapped together. The first polygon can be the control and the second one gets manipulated. The edge of the second between the start and end gets removed and replaced by points from the first between its start and end marks. | ||

| + | #TC does not like some of the points on the shared edge, he would like to select the two polygons and move some of the points along the shared edge and some that are not shared. After looking at his handiwork and TC would like to undo the last few moves. | ||

| + | #TC thinks it is a bother to keep selecting both polygons to do the manipulation and would prefer to "link them together" along the common edge. | ||

| + | #To some extent this imply using topology, and planning for topology support could be very powerful, but this can also be done by maintaining some additional pointers and tracking and updating their children and avoiding circular references. | ||

| + | |||

| + | = Code Review = | ||

| + | Original Author: Cameron Shorter | ||

| + | |||

| + | == Introduction == | ||

| + | This is a code review of the 2 vector rendering code bases at: | ||

| + | |||

| + | # Paul and Anselm's code at: http://svn.openlayers.org/sandbox/pagameba/vector/lib Design at: http://wiki.osgeo.org/index.php/AJAX_WebMapping_Vector_Rendering_Design | ||

| + | # Bertil and Pierre's code at http://svn.openlayers.org/sandbox/bertil/lib Design below: | ||

| + | http://dev.camptocamp.com/openlayers/graphics/Edition.png | ||

| + | |||

| + | I realise that others have contributed to both code bases, but from now on I shall refer to the two code bases as Paul's code and Bertil's code. | ||

| + | |||

| + | The intent of this review is to make suggestions on how the 2 code bases can be merged into one. | ||

| + | |||

| + | == Summary == | ||

| + | Paul has a good programming style. I like his commenting, use of inheritence, and completeness when it comes filling in all the initialisation/destroy/etc type functions. I'd like to see this in the final code base. At the moment, Paul has working code (old design) and half complete code (new design). | ||

| + | |||

| + | Bertil has working code and a solid design. | ||

| + | |||

| + | What I suggest is that we start from Bertil's code base, and apply patches from Paul's code and a couple of changes to the design. | ||

| + | |||

| + | More detailed comments follow. | ||

| + | |||

| + | == General == | ||

| + | |||

| + | Bertil's code seems to depend upon prototype.js. As I understand it Openlayers have tried to remove the dependance upon prototype so this should be fixed. | ||

| + | |||

| + | == Feature and Geometry == | ||

| + | |||

| + | The concept of Geometry is common between the two designs. | ||

| + | |||

| + | Bertil has coded this at OpenLayers/Feature/Geometry/* , I can't find something similar in Paul's code. | ||

| + | So I propose we use Bertils code for this. | ||

| + | |||

| + | The trunk version of OpenLayers shows a WFS.js class as a child of Feature.js. This doesn't seem right and isn't reflected in either design. We can address this later. | ||

| + | |||

| + | == Layer/Vector == | ||

| + | Both designs have a Layer/Vector class which is roughly the same. | ||

| + | There are parts from both implementations which we will probably want to use. | ||

| + | From Bertil's: | ||

| + | * Bertil has moved the renderer out of Vector.js. Paul creates a canvas in Vector.js (which should be removed) | ||

| + | From Paul's: | ||

| + | * Comments | ||

| + | * A "freeze" concept which prevents painting until unfrozen | ||

| + | * Features are added one feature at a time instead of as an array. I suspect this would have better performance? | ||

| + | |||

| + | == Renderer / Graphics / Sheet == | ||

| + | Bertil's code: | ||

| + | * Has Renderer.js (which probably should be called RendererFactory.js). It creates Svg.js or Vml.js. | ||

| + | * The Svg/Vml Renderers has a switch statement which calls this.drawPoint() this.drawLine() etc. | ||

| + | Paul's code: | ||

| + | * Used to have a similar Renderer concept - but based upon IeCanvas instead of Svg/Vml. (The code is still there) | ||

| + | * Started working on a Graphics concept with an object for Circle, Line, etc. | ||

| + | Cameron's design: | ||

| + | http://wiki.osgeo.org/index.php/AJAX_WebMapping_Vector_Rendering_Design#Code_Review | ||

| + | * I propose that we split the Renderer into two classes. | ||

| + | ** Renderer.js should loop through all features, call the appropriate drawFeature function, with the correct style information. | ||

| + | ** Sheet.js (should be called Canvas, except Mozilla already use the word). Sheet.js contains all the drawLine, drawPoint, drawPolyline etc functions. | ||

| + | ** Sheet.js has children Sheet/Svg.js Sheet/Vml.js etc. | ||

| + | ** SheetFactory is a simple class which returns the correct instaniation of Sheet. | ||

| + | My aim here is to seperate the Cross-browser Graphics from the GIS code. Seperating the Graphics out will make it easier for us to build upon Graphics libraries that will be developed and optimized by other projects. | ||

| + | |||

| + | == Style == | ||

| + | * Bertil has a Style object, but it doesn't seem to be used yet. | ||

| + | * Paul doesn't have a Style object. | ||

| + | In our first cut, we don't need to use style, we can hard code all features as red, then insert the Style object later. | ||

| + | |||

| + | == EventManager / GeometryControl == | ||

| + | * Paul doesn't have a GeometryControl object yet (as per my UML design). | ||

| + | * Bertil's code allows an eventManager to be assigned in the Renderer. I don't understand how this works. Mouse events seem to be passed back to the Map object? Do these events include the id of the feature which triggered the event? How are controls associated with the events? | ||

| + | * Bertil: - The eventManager property of Renderer is a reference to the map event manager. This reference allow the renderer to trigg custum edition events when you click, doubleClick (, etc) on represented shapes. These events contains geometry model reference, so when you catch an event you catch also the concerned geometry. The final goal is to abstractify edition events to manage SVG/VML DOM Events and simulate them in Canvas. In this architecture, edition controls are catching the edition events to edit the geometry features. (sorry for english mistakes, it's not my mother tongue) | ||

| + | * Steven: - I discovered that there's a problem with events on shapes: if you use mouseover events on, for instance, points you quickly get a mouse out event when you drag the thing. Points are so small that it's difficult to keep the point under the mouse all the time. I solved it by increasing the point size during mouseOver/mouseMove events. | ||

Latest revision as of 20:48, 9 November 2006

This design aims to be a summary of ideas from the Mapbuilder, OpenLayers, and webmap-dev communities regarding vector rendering design. This is not supposed to be the final design, but rather a draft to be used to facilitate further discussions. My hope is that the final version of this design will be used by all the AJAX webmapping clients to make sharing the same code base easier. I'd like to credit Patrice Cappelaere, who has SVG/VML rendering working in Mapbuilder and deployed at GeoBliki (http://eo1.geobliki.com/wfs/map). This design aims to extend Patrice's code to be more generic and extensible.

Vector Layers Class Diagram

The layer/Vector class is responsible for storing a list of Features and Styles. It calls a Parser to load Features as required (eg for a Web Feature Service), and initiates rendering by calling Renderer.

Simplification of features

As a map is zoomed out, the number of features that need to be rendered increases significantly, to the point where a browser becomes too slow or crashes.

A common solution is to use a different FeatureCollection for different zoomLevels. layer/Vector should store the source URL of each FeatureCollection and the zoomLevels appropriate for it.
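A minimal sketch of that idea, assuming each source entry records a URL and the inclusive zoom range it suits. The names `sources`, `minZoom`, `maxZoom` and `pickSource`, and the file names, are hypothetical and do not come from any of the code bases discussed here.

```javascript
// Hypothetical per-zoom sources held by layer/Vector.
// Zoom ranges are inclusive; higher numbers mean "zoomed in".
const sources = [
  { url: "roads_generalised.gml", minZoom: 0, maxZoom: 5 },
  { url: "roads_medium.gml", minZoom: 6, maxZoom: 10 },
  { url: "roads_full.gml", minZoom: 11, maxZoom: 18 }
];

// Return the source whose zoom range contains zoomLevel, or null if none does.
function pickSource(sourceList, zoomLevel) {
  for (let i = 0; i < sourceList.length; i++) {
    const s = sourceList[i];
    if (zoomLevel >= s.minZoom && zoomLevel <= s.maxZoom) {
      return s;
    }
  }
  return null;
}
```

The layer would then ask its Parser to load `pickSource(sources, zoom).url` whenever the zoom level crosses a range boundary.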

Comments

- Cameron Shorter: It might be better to move the array of features out of layer/Vector and into a FeatureCollection.

Styling Class Diagram

Renderer is responsible for:

- Creating StyledGeometry for each feature. A StyledGeometry's style is determined by parsing the SLD for each Feature.

- Rendering each feature, which is done by calling Sheet.createGraphic().

There are plenty of opportunities for optimizing rendering, and this logic belongs in the Renderer. Eg:

- Only features being displayed should be rendered. (Filter by bounding box).
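The bounding box filter could look roughly like the sketch below. It assumes every feature carries a precomputed `bbox` of the form `[minX, minY, maxX, maxY]` in the same coordinate system as the visible extent; the property and function names are illustrative only.

```javascript
// Bounding boxes are assumed to be [minX, minY, maxX, maxY].
// Two boxes intersect when they overlap on both axes.
function intersects(a, b) {
  return a[0] <= b[2] && a[2] >= b[0] && a[1] <= b[3] && a[3] >= b[1];
}

// Keep only the features whose bounding box overlaps the visible extent.
function visibleFeatures(features, extent) {
  return features.filter(function (feature) {
    return intersects(feature.bbox, extent);
  });
}
```

The Renderer would call `visibleFeatures` before creating any graphics, so features outside the map window never reach the Sheet.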

Comments

Cameron Shorter: StyledGeometry should probably be merged into Feature. There is a 1:1 relationship between the 2.

Graphics Class Diagram

Sheet and Graphic represent graphic primitives. They deal directly in pixel coordinates and know nothing about the spatial objects they represent.

Sheet represents a vector rendering engine and is responsible for creating shapes.

Graphic represents a graphic shape (line, polygon, circle, etc). It points to a physical shape in the HTML DOM.

Renderer Characteristics

SVG: SVG is built into Firefox 1.5+, i.e. it doesn't require a plugin. It is not provided in IE 6.0. SVG allows mouseEvents to be attached to shapes. 10,000 features take ~ 40 secs to render. (MSD3 dataset)

VML: VML is similar to SVG and is provided with IE 6.0 (& earlier?). VML allows mouseEvents to be attached to shapes. 10,000 features take ~ 8 mins to render.

Canvas: Canvas is provided with Firefox 1.5+. It is reportedly faster than SVG, but you cannot attach mouseEvents to features.

WZ Graphics: A library available for older browsers which draws a line by drawing hundreds of colored DIV tags. It is limited in the size of the data it can render. Suitable for ~ 50 lines, ~ 1000 points.

Attaching MouseEvents to Features

This functionality makes it easier to select a feature by clicking on it. The workaround (for Canvas) will require a search algorithm to be written in JS to search through all the features.
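One possible shape for that search is a linear scan with a ray-casting point-in-polygon test, sketched below. It assumes each feature keeps its outline as a `ring` of `[x, y]` pixel pairs and handles polygons only; lines and points would need a distance tolerance instead. The names `pointInRing` and `featureAtPixel` are hypothetical.

```javascript
// Ray-casting test: is pixel (x, y) inside the polygon ring?
// ring is an array of [x, y] pixel pairs; the closing segment is implied.
function pointInRing(x, y, ring) {
  let inside = false;
  for (let i = 0, j = ring.length - 1; i < ring.length; j = i++) {
    const xi = ring[i][0], yi = ring[i][1];
    const xj = ring[j][0], yj = ring[j][1];
    // Does a horizontal ray from (x, y) towards +x cross edge j -> i?
    const crosses = (yi > y) !== (yj > y) &&
      x < ((xj - xi) * (y - yi)) / (yj - yi) + xi;
    if (crosses) {
      inside = !inside;
    }
  }
  return inside;
}

// Search from the last-drawn feature backwards so the topmost one wins.
function featureAtPixel(features, x, y) {
  for (let i = features.length - 1; i >= 0; i--) {
    if (pointInRing(x, y, features[i].ring)) {
      return features[i];
    }
  }
  return null;
}
```

A scan like this is linear in the number of features, so combining it with a bounding box check per feature would be a natural first optimisation.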

Rendering Libraries

There are opportunities for collaboration with other projects/libraries in this Graphics package. In particular:

- This Graphics library should be able to stand alone as a graphics rendering library, independent of the Geospatial side of the code. It can then be packaged up and used for other AJAX clients requiring Vector rendering graphics.

- ExplorerCanvas is a cross-browser Canvas library. It supports Canvas in Firefox and falls back to VML in IE. I don't think it supports attaching mouse events to features.

- Dojo has a cross-browser VML/SVG library, dojo.gfx. A demo is at http://archive.dojotoolkit.org/nightly/demos/gfx/circles.html. There is talk of extending support to other renderers. The Dojo project has a strong community behind it and is worth considering.

- http://starkravingfinkle.org/blog/2006/03/svg-in-ie is worth watching too.

Comments

- Jody Garnett: You can look into the Go-1 specification for language to use. I.e. you cannot handle a raw SVG element produced from a Geometry; you will need to decimate it based on the current display DPI. Go-1 provides the idea of a "Graphic" which is produced by processing the Feature+SLD into a Shape+Style. Using SVG (with the feature attributes boiled down to some style cues) and the feature id, you could have both a nice display visually identical to looking at the complete feature, and enough information to request the feature attributes when an SVG shape is clicked on. Note: you can build a lazy mapping from ID to a name attribute for tooltip purposes if you need.

Parsers Class Diagram

The Parser objects will parse source documents (usually in XML format) and build JavaScript objects. Documents to be parsed include:

- Geography Markup Language (GML)

- Styled Layer Descriptor (SLD): describes style for GML

- Geographic RSS (GeoRSS): RSS with geographic tags

- Context (The Context is an XML description of the layers on a map, the source of the data, and the style to apply to the layers).

...

MouseEvent Processing Class Diagram

MouseEvents can be attached to each Geometry. This means that we can add functions like:

- Popups when a user hovers over a feature.

- Select a feature when a user clicks on the feature.

- Select a vertex when a user clicks on a vertex. (This would require a shape to be drawn on each vertex of a line being queried).

Source UML

The source UML diagram is stored in the Mapbuilder subversion repository at:

https://svn.codehaus.org/mapbuilder/trunk/mapbuilder/mapbuilder/design/uml/graphics.xmi

It was created using Umbrello on Linux.

Use Cases

John Frank did an excellent job outlining the major use cases for Geospatial Vector Rendering:

Better vector data support in OpenLayers will help many of us. I am totally in favor of it. To reality check the proposed design, I'd like to see a few use cases sketched out. Here are a few that seem important to me. Each use case has two components: a user interface challenge and a datastructure challenge. There will typically be many solutions to the user interface challenge; I'm suggesting we think of at least one UI solution to check the proposed nouns and verbs. The canonical "user" is always a very Tough Customer, so I use that as though it were a person's name, and their initials are TC.

- An open polyline and also a point are rendered on the screen. TC wants to *add* the point to the polyline to increase its depiction of some underlying reality. How does TC specify where in the line to add the point, and how do these classes handle that insertion? Logically, the input needs to specify which existing segment of the polyline to break and replace with two new line segments.

- Continuing one: TC wants to move an existing point in a polyline...

- TC constructs a sequence of points by clicking in the map, and wants to see those points connected together in a polyline. Naturally, TC will construct a self-intersecting path. What is the sequence of method calls?

- After loading several vector data layers from various sources, TC wants to merge ten different features together into one "thing" that can be stored separately. How does she do that? What happens in the code? How does it keep track of where the data came from originally?

- After loading a vector data layer that may or may not have come with styling information, TC wants to change the styling information used in presenting that data on her map. Using some eye candy on the screen, she can input some choices, and then: what is the sequence of actions under the hood?

- Continuing four: TC wants to change the *displayed position* of a point or a line without changing the underlying data. As cartography, each map's visual appearance communicates information that a particular scale might not permit the raw data to easily reveal. The canonical example was shown at the ESRI UC a few weeks ago: two road segments are distinct, but at a particular scale, the cartographic styling makes them appear so wide that they merge visually. The best known solution to this is to actually move the pixel position of one of the lines to visually indicate the merger. What is the sequence of steps and what datastructures change? How are the styling choices associated with the chosen scale?

- After laying out a sequence of points that form a closed polygon, TC wants to know the area and perimeter of the polygon. How does this information get calculated and passed out?

- A group of cyclists documents their summer trip in a data set with a long polyline covering four thousand miles and various point features with interesting styling and labels. TC wants to *play* the story in an OpenLayers map. By "play," I mean the video analogy of having a stop/play forward/play backward button, fast forward and fast backward buttons, and when the system is in action, the map automatically moves and the popups automatically appear as the map moves along the route at some scale specified in the styling made by the cyclists. What data structures represent the cyclists' details? What sequence of methods causes the movie to play?

- TC gets assigned a task: digitize all the roads in Madagascar using this 60cm satellite imagery. How does this design make that easy? I realize that some of these may get cast out of initial designs as being too advanced for our first pass. However, I think such a choice should be made consciously.
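For the area and perimeter use case above, one plausible calculation is the shoelace formula, sketched below. It assumes the polygon is a simple (non-self-intersecting) ring of `[x, y]` pairs in a planar, projected coordinate system; longitude/latitude input would need projecting first. The function names are hypothetical.

```javascript
// ring is an array of [x, y] pairs in a planar coordinate system;
// the closing segment back to the first point is implied.
function ringArea(ring) {
  let sum = 0;
  for (let i = 0; i < ring.length; i++) {
    const a = ring[i];
    const b = ring[(i + 1) % ring.length];
    sum += a[0] * b[1] - b[0] * a[1]; // shoelace cross term
  }
  return Math.abs(sum) / 2;
}

// Sum of the lengths of all edges, including the closing edge.
function ringPerimeter(ring) {
  let total = 0;
  for (let i = 0; i < ring.length; i++) {
    const a = ring[i];
    const b = ring[(i + 1) % ring.length];
    total += Math.hypot(b[0] - a[0], b[1] - a[1]);
  }
  return total;
}
```

Both functions only need the geometry's coordinates, so they could live on a Geometry class and be reported back to the user by whichever control finishes the digitising.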

Some additional use cases added by Stephen Woodbridge:

- TC has loaded some polygon data from different sources. He has some adjacent polygons that he wants to share a common edge. He needs to select each polygon and the start and end of the shared edge on each that needs to be snapped together. The first polygon can be the control and the second one gets manipulated. The edge of the second between the start and end gets removed and replaced by points from the first between its start and end marks.

- TC does not like some of the points on the shared edge; he would like to select the two polygons and move some of the points along the shared edge and some that are not shared. After looking at his handiwork, TC would like to undo the last few moves.

- TC thinks it is a bother to keep selecting both polygons to do the manipulation and would prefer to "link them together" along the common edge.

- To some extent this implies using topology, and planning for topology support could be very powerful, but this can also be done by maintaining some additional pointers, tracking and updating their children, and avoiding circular references.

Code Review

Original Author: Cameron Shorter

Introduction

This is a code review of the 2 vector rendering code bases at:

- Paul and Anselm's code at: http://svn.openlayers.org/sandbox/pagameba/vector/lib Design at: http://wiki.osgeo.org/index.php/AJAX_WebMapping_Vector_Rendering_Design

- Bertil and Pierre's code at http://svn.openlayers.org/sandbox/bertil/lib Design below:

http://dev.camptocamp.com/openlayers/graphics/Edition.png

I realise that others have contributed to both code bases, but from now on I shall refer to the two code bases as Paul's code and Bertil's code.

The intent of this review is to make suggestions on how the 2 code bases can be merged into one.

Summary

Paul has a good programming style. I like his commenting, use of inheritance, and completeness when it comes to filling in all the initialisation/destroy/etc type functions. I'd like to see this in the final code base. At the moment, Paul has working code (old design) and half complete code (new design).

Bertil has working code and a solid design.

What I suggest is that we start from Bertil's code base, and apply patches from Paul's code and a couple of changes to the design.

More detailed comments follow.

General

Bertil's code seems to depend upon prototype.js. As I understand it, OpenLayers has tried to remove the dependence upon prototype, so this should be fixed.

Feature and Geometry

The concept of Geometry is common between the two designs.

Bertil has coded this at OpenLayers/Feature/Geometry/*; I can't find anything similar in Paul's code. So I propose we use Bertil's code for this.

The trunk version of OpenLayers shows a WFS.js class as a child of Feature.js. This doesn't seem right and isn't reflected in either design. We can address this later.

Layer/Vector

Both designs have a Layer/Vector class which is roughly the same. There are parts from both implementations which we will probably want to use.

From Bertil's:

- Bertil has moved the renderer out of Vector.js. Paul creates a canvas in Vector.js (which should be removed)

From Paul's:

- Comments

- A "freeze" concept which prevents painting until unfrozen

- Features are added one feature at a time instead of as an array. I suspect this would have better performance?
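The "freeze" concept could work roughly as in the sketch below. This is not Paul's actual code: the constructor `VectorLayer`, its `renderFn` callback and the method names are assumptions made for illustration.

```javascript
// Hypothetical vector layer that can suppress painting while frozen.
function VectorLayer(renderFn) {
  this.features = [];
  this.frozen = false;
  this.renderFn = renderFn; // called with the feature array when painting
}

// Add one feature; paint immediately unless the layer is frozen.
VectorLayer.prototype.addFeature = function (feature) {
  this.features.push(feature);
  if (!this.frozen) {
    this.renderFn(this.features);
  }
};

VectorLayer.prototype.freeze = function () {
  this.frozen = true;
};

// Unfreezing triggers a single paint that covers everything added meanwhile.
VectorLayer.prototype.unfreeze = function () {
  this.frozen = false;
  this.renderFn(this.features);
};
```

With a freeze in place, adding features one at a time costs a single repaint on unfreeze rather than one per feature, which bears on the performance question raised above.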

Renderer / Graphics / Sheet

Bertil's code:

- Has Renderer.js (which probably should be called RendererFactory.js). It creates Svg.js or Vml.js.

- The Svg/Vml Renderers have a switch statement which calls this.drawPoint(), this.drawLine(), etc.

Paul's code:

- Used to have a similar Renderer concept - but based upon IeCanvas instead of Svg/Vml. (The code is still there)

- Started working on a Graphics concept with an object for Circle, Line, etc.

Cameron's design: http://wiki.osgeo.org/index.php/AJAX_WebMapping_Vector_Rendering_Design#Code_Review

- I propose that we split the Renderer into two classes.

  - Renderer.js should loop through all features and call the appropriate drawFeature function with the correct style information.

  - Sheet.js (which should be called Canvas, except Mozilla already uses the word) contains all the drawLine, drawPoint, drawPolyline etc. functions.

  - Sheet.js has children Sheet/Svg.js, Sheet/Vml.js, etc.

  - SheetFactory is a simple class which returns the correct instantiation of Sheet.

My aim here is to separate the cross-browser Graphics from the GIS code. Separating the Graphics out will make it easier for us to build upon Graphics libraries that will be developed and optimized by other projects.
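The proposed split might be sketched as follows. To stay runnable without a browser, the sheets here only record the calls they receive; real Svg and Vml sheets would create DOM elements instead. All names (`makeSheet`, `createSheet`, `renderFeatures`, the `supportsSvg` flag) are assumptions for illustration, not code from either sandbox.

```javascript
// Sheet: pixel-level drawing primitives with no GIS knowledge.
// This stand-in records calls instead of touching the DOM.
function makeSheet(engine) {
  return {
    engine: engine,
    calls: [],
    drawPoint: function (x, y, style) {
      this.calls.push(engine + " point " + x + "," + y + " " + style.color);
    },
    drawLine: function (points, style) {
      this.calls.push(engine + " line " + points.length + "pts " + style.color);
    }
  };
}

// SheetFactory: return the Sheet implementation the browser can use.
function createSheet(supportsSvg) {
  return makeSheet(supportsSvg ? "svg" : "vml");
}

// Renderer: loop through features and call the matching draw function.
function renderFeatures(features, sheet, style) {
  features.forEach(function (feature) {
    if (feature.type === "Point") {
      sheet.drawPoint(feature.coords[0], feature.coords[1], style);
    } else if (feature.type === "Line") {
      sheet.drawLine(feature.coords, style);
    }
  });
}
```

Because `renderFeatures` only talks to the Sheet interface, a third-party graphics library could be swapped in by writing one more Sheet implementation.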

Style

- Bertil has a Style object, but it doesn't seem to be used yet.

- Paul doesn't have a Style object.

In our first cut, we don't need to use style; we can hard-code all features as red, then insert the Style object later.

EventManager / GeometryControl

- Paul doesn't have a GeometryControl object yet (as per my UML design).

- Bertil's code allows an eventManager to be assigned in the Renderer. I don't understand how this works. Mouse events seem to be passed back to the Map object? Do these events include the id of the feature which triggered the event? How are controls associated with the events?

- Bertil: - The eventManager property of Renderer is a reference to the map event manager. This reference allows the renderer to trigger custom editing events when you click, doubleClick, etc. on represented shapes. These events contain a reference to the geometry model, so when you catch an event you also catch the geometry concerned. The final goal is to abstract the editing events so that they manage SVG/VML DOM events and simulate them in Canvas. In this architecture, editing controls catch the editing events to edit the geometry features. (sorry for English mistakes, it's not my mother tongue)

- Steven: - I discovered that there's a problem with events on shapes: if you use mouseover events on, for instance, points you quickly get a mouse out event when you drag the thing. Points are so small that it's difficult to keep the point under the mouse all the time. I solved it by increasing the point size during mouseOver/mouseMove events.
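The event flow Bertil describes could be sketched as below. This is a guess at the structure rather than his implementation: the `EventManager` constructor, the `"geometryclick"` event name and the `onShapeClick` helper are all hypothetical.

```javascript
// Minimal event manager: controls register callbacks by event type.
function EventManager() {
  this.listeners = {};
}

EventManager.prototype.register = function (type, callback) {
  (this.listeners[type] = this.listeners[type] || []).push(callback);
};

EventManager.prototype.trigger = function (type, evt) {
  (this.listeners[type] || []).forEach(function (callback) {
    callback(evt);
  });
};

// What a renderer might do when the shape drawn for `geometry` is clicked:
// forward an editing event that carries the geometry itself.
function onShapeClick(eventManager, geometry) {
  eventManager.trigger("geometryclick", {
    type: "geometryclick",
    geometry: geometry
  });
}
```

An editing control would call `register("geometryclick", handler)` on the map's event manager and receive the clicked geometry directly, regardless of whether the shape came from SVG, VML, or a simulated Canvas hit test.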